I've created a test suite that takes a Solr schema.xml and puts it through its paces. The schema file is used to configure a Solr instance, which is then fed a collection of one million documents of up to 2,000 words each. Each document consists of random text, with words chosen at an English-like frequency, and the total corpus is around 6GB in size.
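
As a rough illustration of the corpus generation described above, here is a minimal sketch. It assumes a Zipf-like weighting (weight 1/rank) as a stand-in for "English-like frequency"; the tiny vocabulary, the function names and the seed are all hypothetical and not taken from the actual test suite.

```python
import random

def zipf_weights(vocab_size, exponent=1.0):
    """Weight for rank r is 1 / r**exponent (an assumed Zipf-like model)."""
    return [1.0 / (rank ** exponent) for rank in range(1, vocab_size + 1)]

def make_document(vocab, weights, rng, max_words=2000):
    """Return random text of 1..max_words words, drawn by weight."""
    length = rng.randint(1, max_words)
    return " ".join(rng.choices(vocab, weights=weights, k=length))

# Hypothetical stand-in vocabulary, ordered most frequent first.
vocab = ["the", "of", "to", "in", "fish", "index", "schema", "query"]
weights = zipf_weights(len(vocab))

rng = random.Random(42)  # fixed seed so a run can be repeated
doc = make_document(vocab, weights, rng, max_words=50)
```

A real run would swap in a proper word-frequency list and call `make_document` one million times with the default `max_words=2000`.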

Once the documents have been indexed, the suite simulates query load with 5 concurrent users performing a total of 10,000 queries (2,000 each). The terms in each query are chosen using the same technique as above, with various operators (AND/OR/NOT) thrown in for good measure. I've left out phrase queries for now because my "lean" ngram modifications mean they're no longer supported when searching the ngram field (I'm not sure we want to phrase search ngrams anyway...)
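
The query workload can be sketched along the same lines. This is only an assumption about how such a generator might look: the maximum number of terms per query, the vocabulary and the helper names are hypothetical, and the actual suite may mix operators differently.

```python
import random

OPERATORS = ("AND", "OR", "NOT")

def make_query(vocab, weights, rng, max_terms=4):
    """Join 1..max_terms frequency-weighted terms with random operators."""
    terms = rng.choices(vocab, weights=weights, k=rng.randint(1, max_terms))
    parts = [terms[0]]
    for term in terms[1:]:
        parts += [rng.choice(OPERATORS), term]
    return " ".join(parts)

def make_workload(vocab, weights, users=5, queries_per_user=2000, seed=0):
    """Build one list of queries per simulated user."""
    rng = random.Random(seed)
    return [[make_query(vocab, weights, rng) for _ in range(queries_per_user)]
            for _ in range(users)]

# Hypothetical stand-in vocabulary, ordered most frequent first.
vocab = ["the", "of", "to", "in", "fish", "index", "schema", "query"]
weights = [1.0 / rank for rank in range(1, len(vocab) + 1)]

workload = make_workload(vocab, weights)  # 5 users x 2,000 queries
```

Each inner list would then be replayed by its own thread or process to produce the concurrent load.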

Keeping in mind these are generated by choosing random words with the frequency that people actually use them (NSFW?):

I had intended to benchmark the github/master schema file (with its max ngram length of 15) but indexing performance was really poor (~ 6 documents/second, and at the 12% mark the index size was 25GB!). This worried me enough that I didn't bother finishing the benchmark...
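
To get a feel for why a maximum ngram length of 15 inflates the index so much, the sketch below counts the substrings a plain ngram analysis would emit for a single word. The minimum gram size of 1 is an assumption for illustration (the schema's actual minimum isn't stated here), and the functions are a conceptual model rather than Solr's implementation.

```python
def ngrams(word, min_gram, max_gram):
    """All substrings of word with length min_gram..max_gram."""
    out = []
    for size in range(min_gram, min(max_gram, len(word)) + 1):
        for start in range(len(word) - size + 1):
            out.append(word[start:start + size])
    return out

def edge_ngrams(word, min_gram, max_gram):
    """Only the prefixes of word with length min_gram..max_gram."""
    return [word[:size] for size in range(min_gram, min(max_gram, len(word)) + 1)]

word = "benchmarking"  # 12 characters
count_max_5 = len(ngrams(word, 1, 5))        # 12 + 11 + 10 + 9 + 8 = 50
count_max_15 = len(ngrams(word, 1, 15))      # 12 + 11 + ... + 1 = 78
count_edge_5 = len(edge_ngrams(word, 1, 5))  # 5 prefixes
```

Under these assumptions a 12-letter word yields 78 terms at max length 15 versus 50 at max length 5, and the gap widens for longer words.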

For whatever reason, I've used "lean" to signify schema changes that don't change the search semantics but reduce the overall size of the index on disk.

Indexing is performed by two processes POSTing batches of 1,000 documents via the Solr web interface. The indexing process is CPU-bound in all cases tested so far.
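
The batching side of this can be sketched as follows. The HTTP POST itself is deliberately left out, since the update URL and payload format depend on the Solr setup; the helper name and the round-robin split between the two processes are assumptions for illustration.

```python
from itertools import islice

def batches(docs, size=1000):
    """Yield consecutive lists of up to `size` documents."""
    it = iter(docs)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch

# Hypothetical stand-in for the document stream (just IDs here).
all_batches = list(batches(range(2500)))

# Assumed split: hand alternate batches to each of the two indexing processes.
per_process = [all_batches[i::2] for i in range(2)]
```

Each process would then POST its batches one after another to Solr's update handler.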

| Indexing performance: | 175 documents/second |
|---|---|
| Indexing time: | 5704 seconds |
| Total index size: | 20GB |

| Indexing performance: | 294 documents/second |
|---|---|
| Indexing time: | 3403 seconds |
| Total index size: | 5.4GB |

| Indexing performance: | 608 documents/second |
|---|---|
| Indexing time: | 1643 seconds |
| Total index size: | 8.8GB |

| Indexing performance: | 977 documents/second |
|---|---|
| Indexing time: | 1023 seconds |
| Total index size: | 2.3GB |

[Shares an index with no-ngrams-lean]

| Indexing performance: | 150 documents/second |
|---|---|
| Indexing time: | 6633 seconds |
| Total index size: | 20GB |

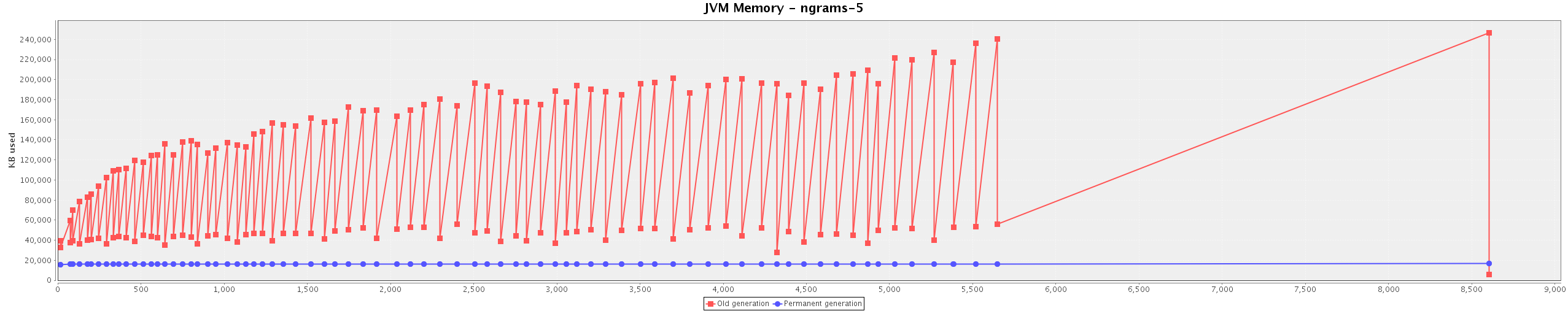

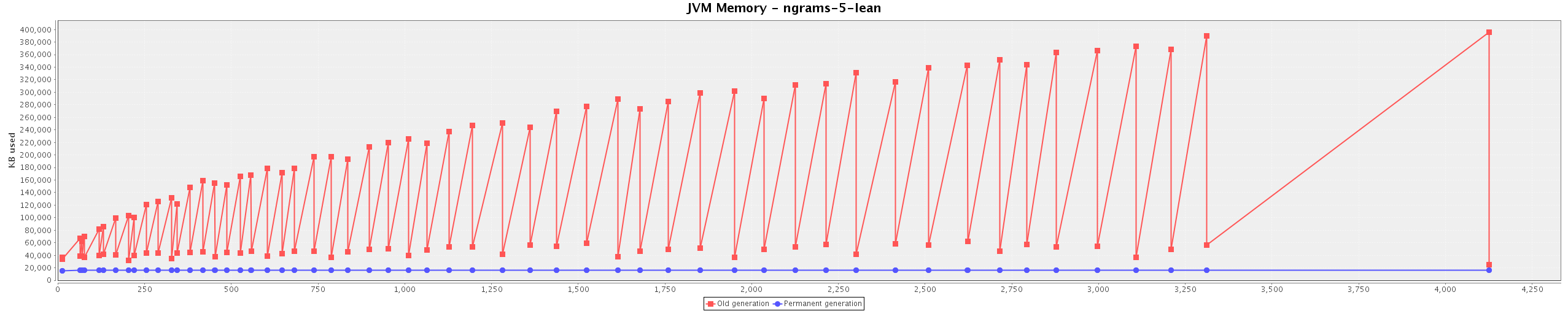

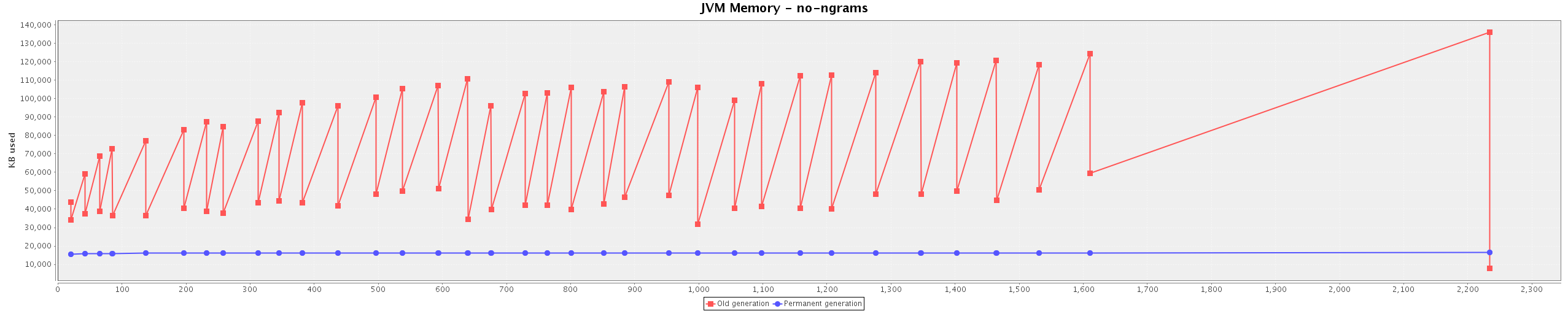

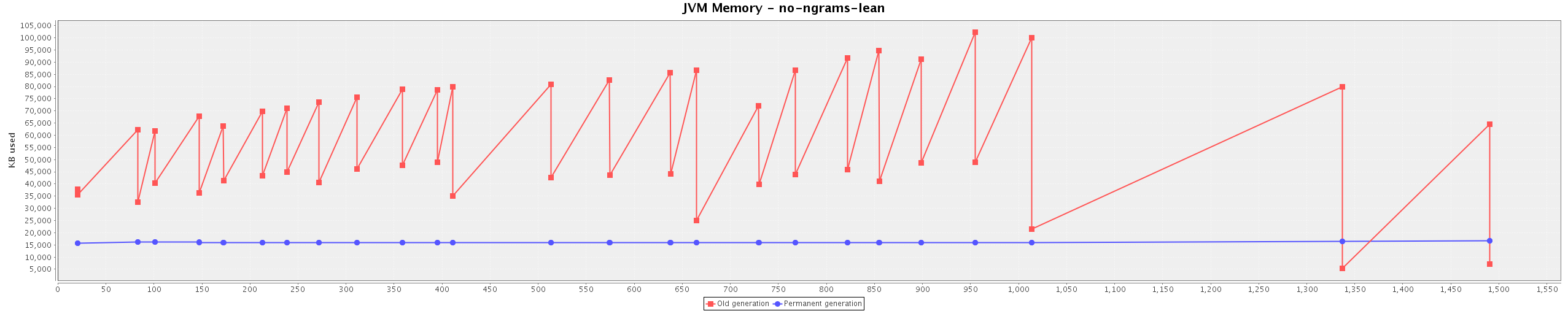

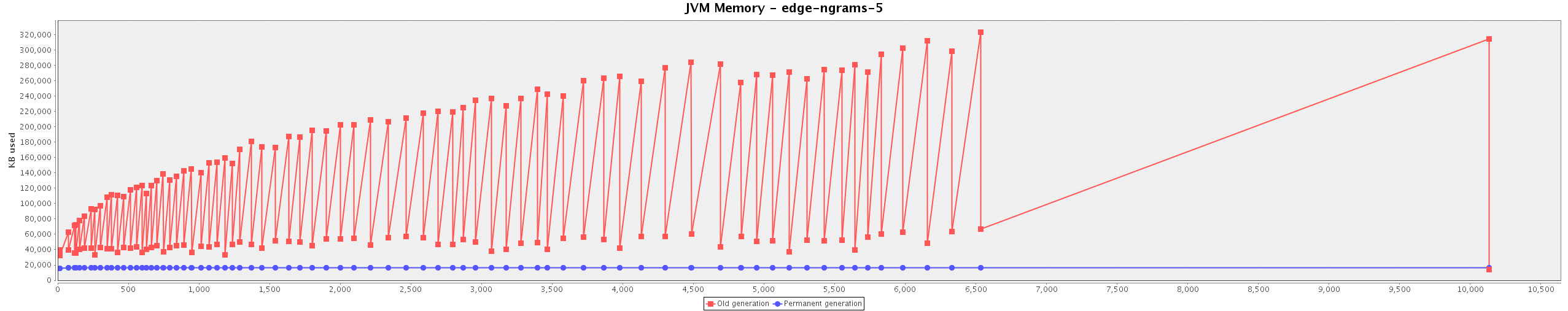

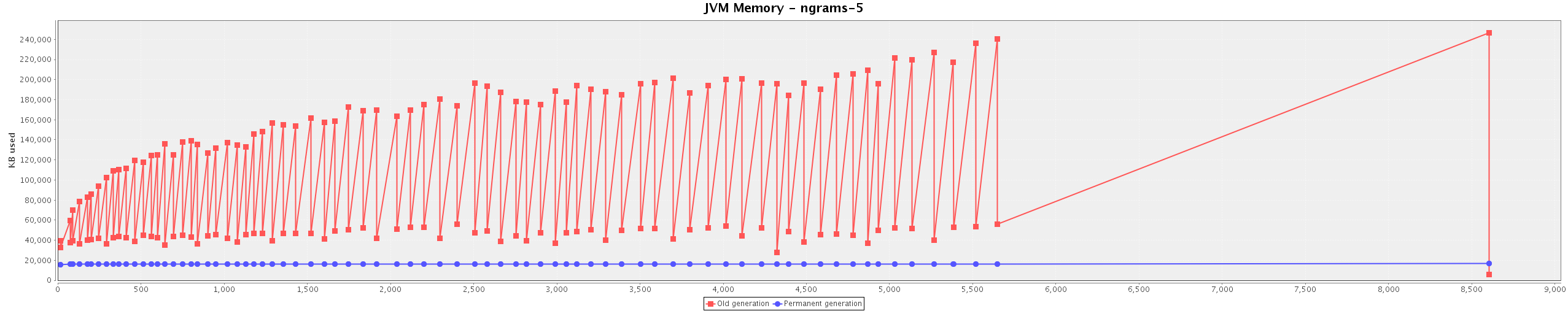

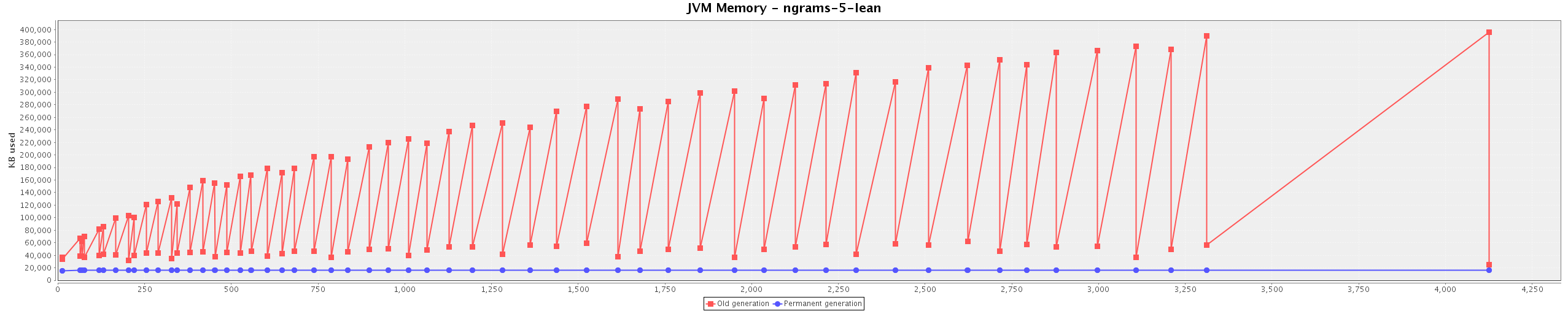

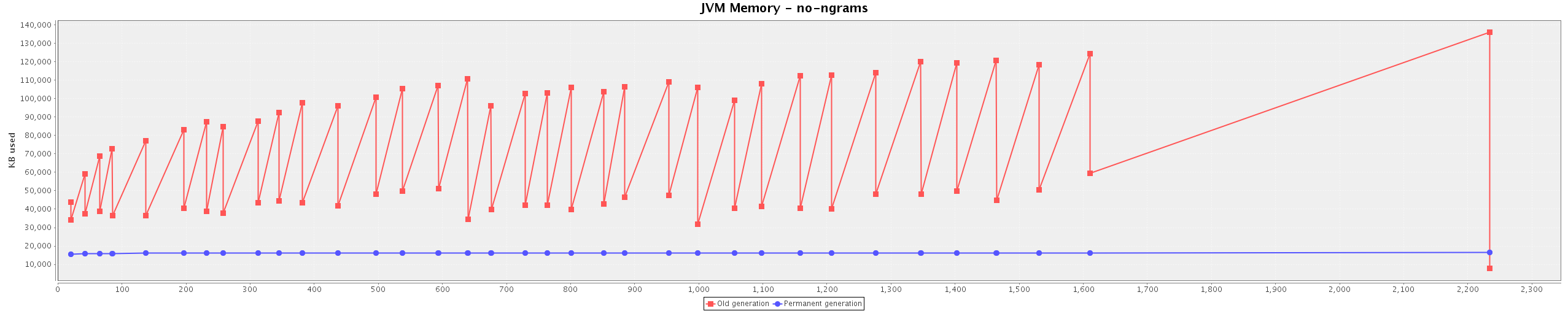

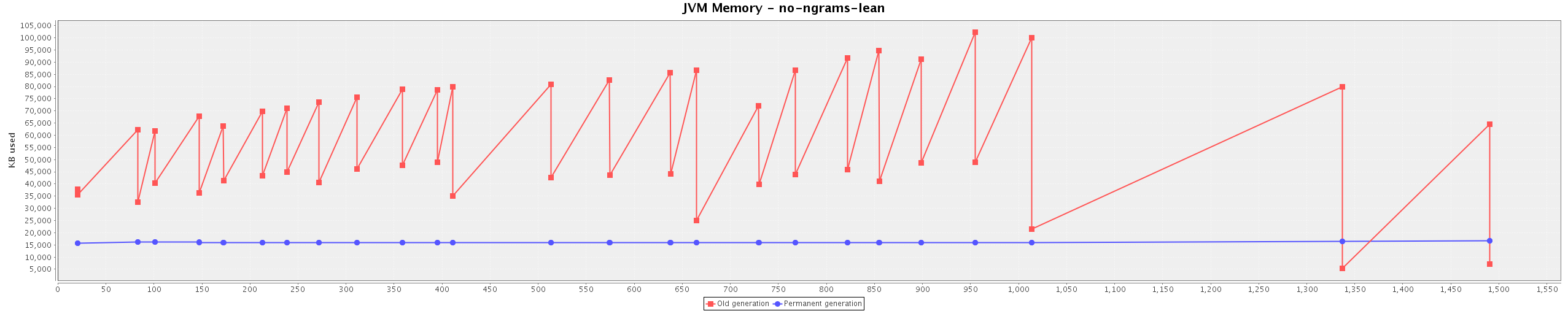

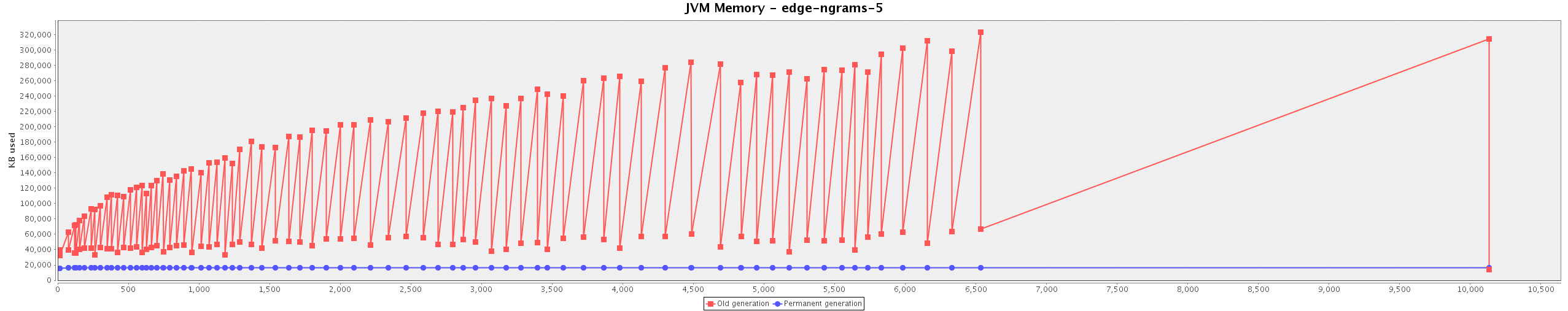

All tests show a similar pattern: reasonable GC activity while indexing, reduced while queries are running. Memory usage seems mostly unchanged by the different schema definitions.

Note: for the ngrammed indexes, the ngram field was queried by repeating the original query (against the content field) and joining the two with OR. E.g.: content:fish OR ngram:fish.
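
A minimal sketch of that rewrite is shown below. The single-term case matches the example above; wrapping multi-term queries in parentheses is an assumption about how compound queries would be scoped to each field, and the function name is hypothetical.

```python
def with_ngram_field(query, content_field="content", ngram_field="ngram"):
    """Repeat a query against both fields and join the two copies with OR."""
    # Assumption: multi-term queries are grouped so the field prefix
    # applies to the whole expression rather than just the first term.
    scoped = query if len(query.split()) == 1 else f"({query})"
    return f"{content_field}:{scoped} OR {ngram_field}:{scoped}"

single = with_ngram_field("fish")
compound = with_ngram_field("fish AND chips")
```

Here `single` is `content:fish OR ngram:fish`, and `compound` is `content:(fish AND chips) OR ngram:(fish AND chips)`.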

5 concurrent searchers performing 2,000 queries each:

| | wildcards-lean | ngrams-5 | ngrams-5-lean | no-ngrams | no-ngrams-lean | edge-ngrams-5 |
|---|---|---|---|---|---|---|
| Queries per second: | 7 | 11 | 16 | 27 | 30 | 9 |
| Average query response time (ms): | 699 | 393 | 272 | 159 | 148 | 450 |
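
As a sanity check on the two rows above: if each of the 5 searchers issues its next query as soon as the previous one returns (an assumption about the load generator), throughput is bounded by roughly users / average response time. The sketch below computes that bound from the reported averages; the names are hypothetical.

```python
CONCURRENT_USERS = 5

# Average response times (ms) as reported in the table above.
avg_response_ms = {
    "wildcards-lean": 699,
    "ngrams-5": 393,
    "ngrams-5-lean": 272,
    "no-ngrams": 159,
    "no-ngrams-lean": 148,
    "edge-ngrams-5": 450,
}

def max_qps(users, avg_ms):
    """Upper bound on queries/second for a closed loop of `users` clients."""
    return users / (avg_ms / 1000.0)

bounds = {name: max_qps(CONCURRENT_USERS, ms)
          for name, ms in avg_response_ms.items()}
```

The bounds come out slightly above the measured queries per second (about 7.2 versus 7 for wildcards-lean, for instance), which would be consistent with a small amount of per-query overhead on the client side.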

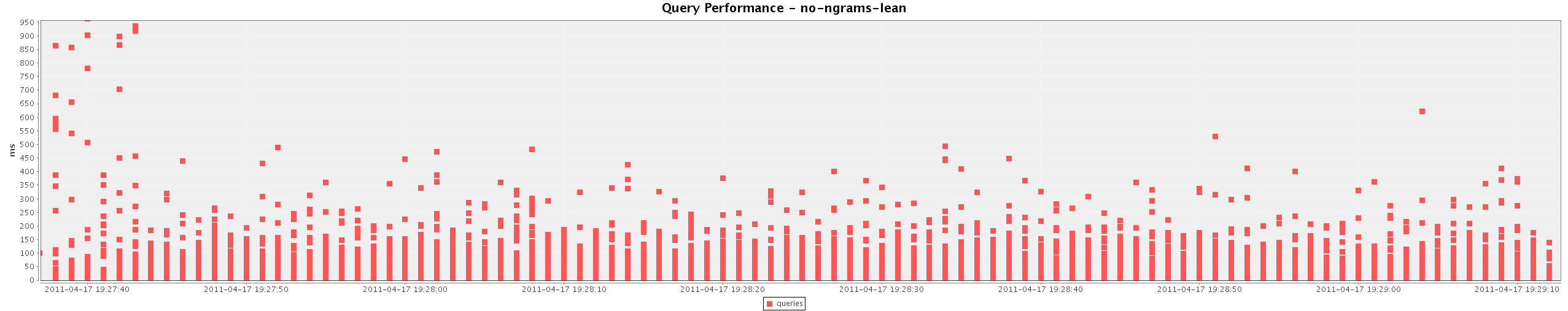

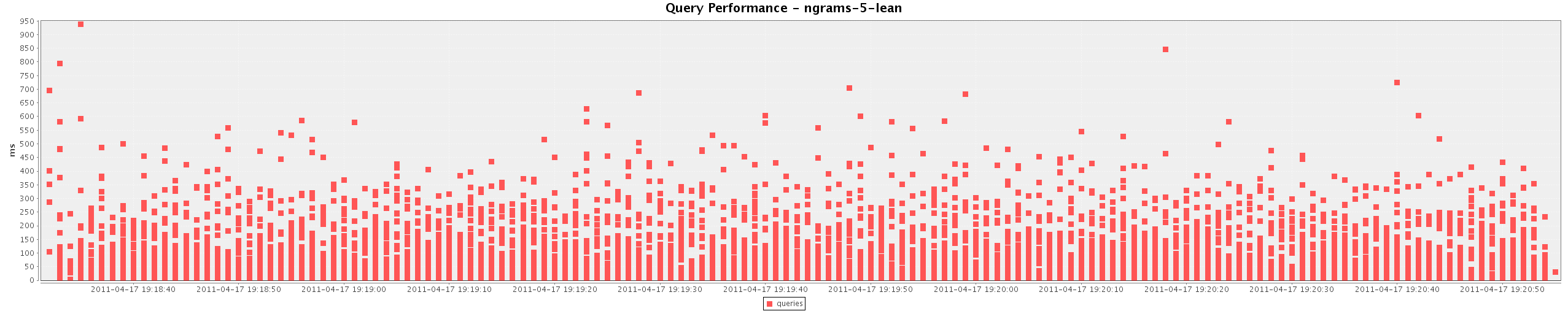

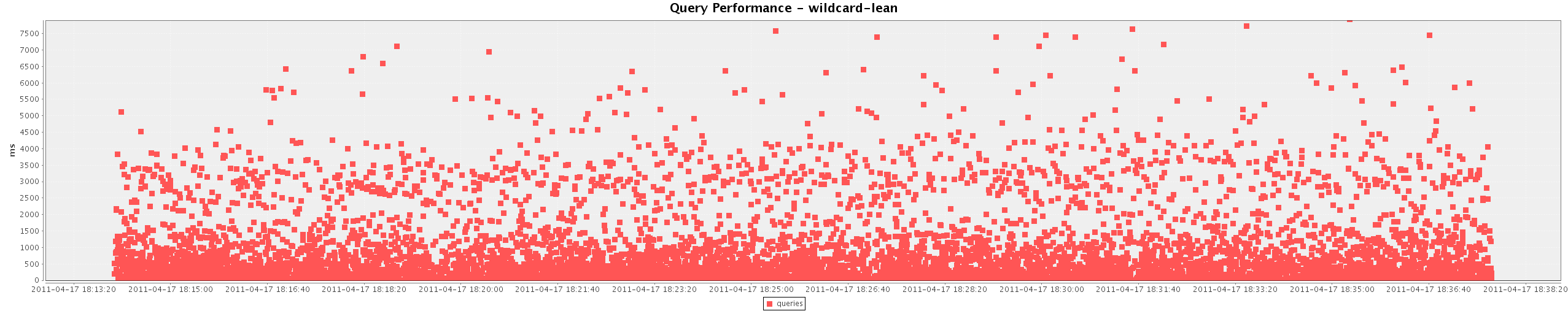

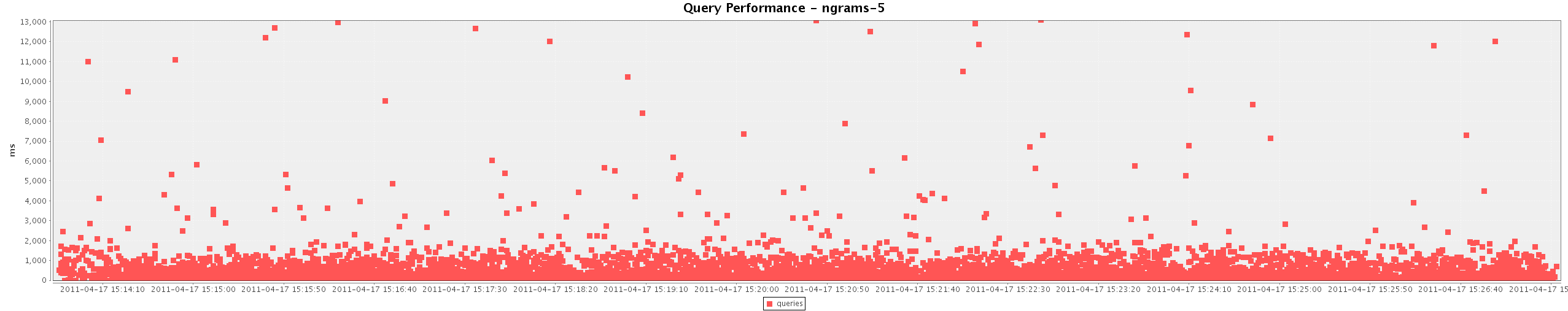

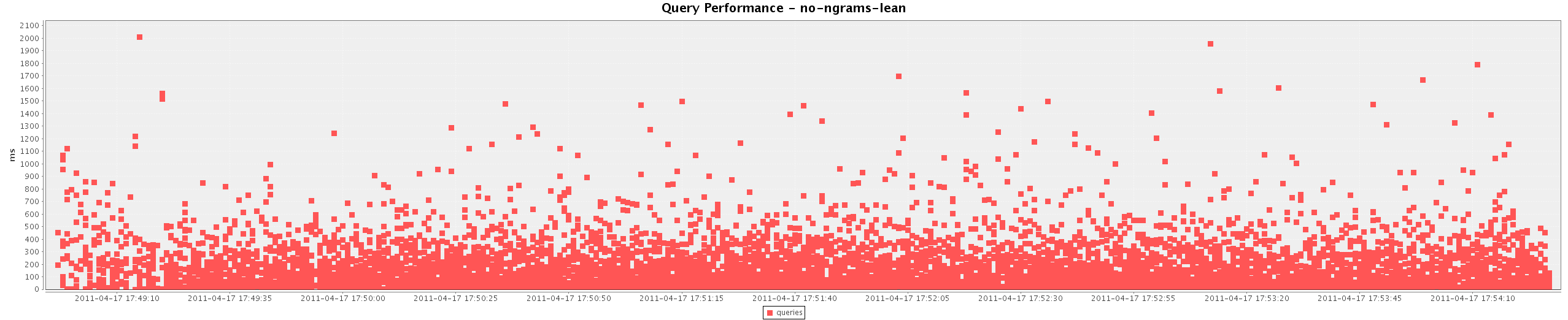

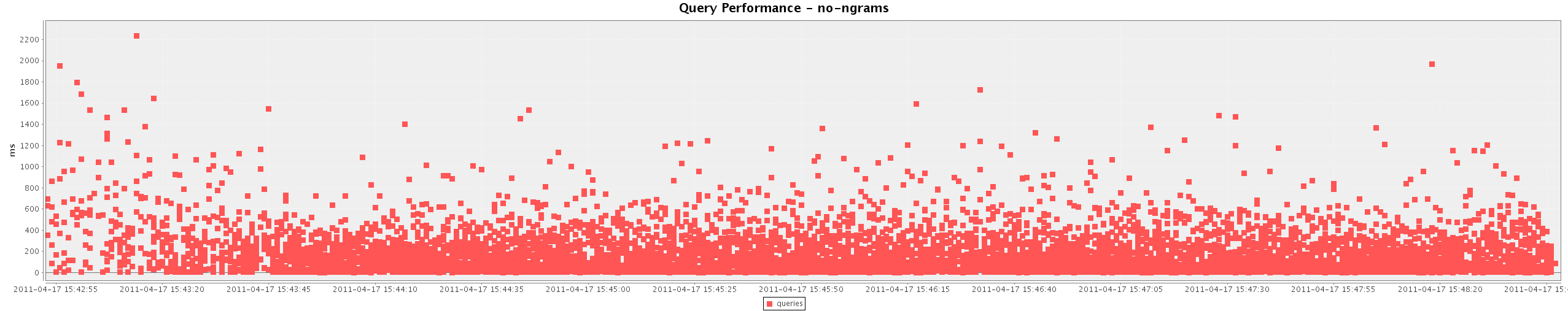

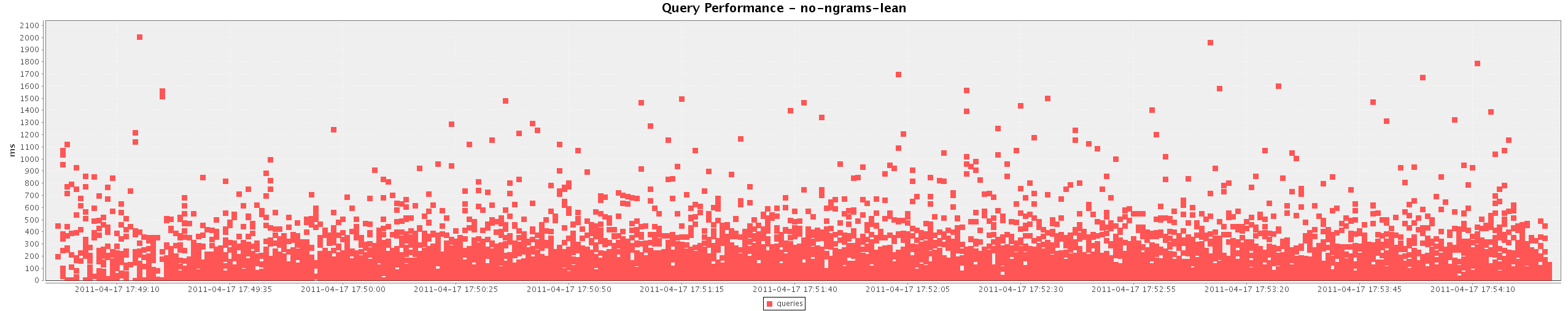

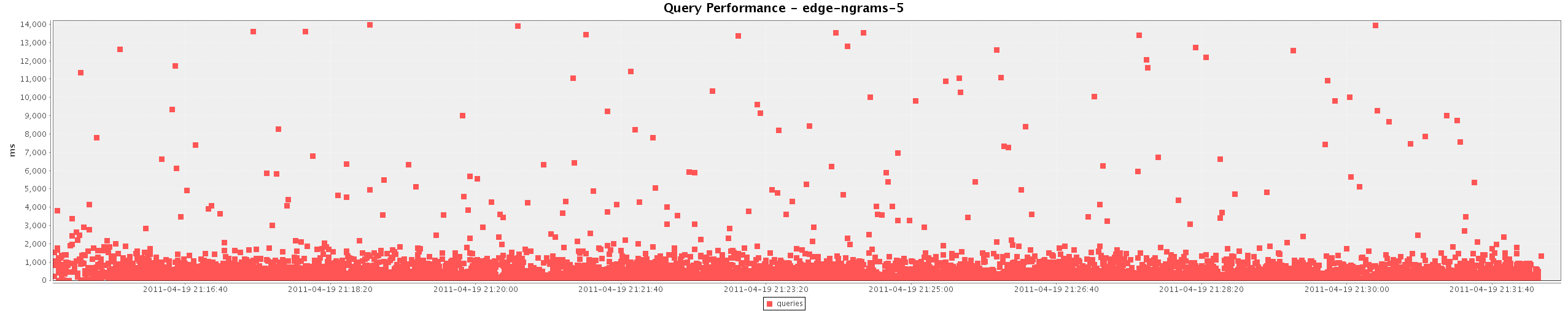

Each point below represents the response time of a single query:

As you would expect, adding ngrams has slowed query performance, but I think there are two effects contributing to that slowdown:

To better isolate the query-time overheads caused by ngrams from the performance hit caused by growing index sizes, I repeated the tests for ngrams-5-lean and no-ngrams-lean using only 250,000 documents for testing. The indexing stats were:

| Indexing time: | 802 seconds |
|---|---|
| Total index size: | 1.4GB |

| Indexing time: | 235 seconds |
|---|---|
| Total index size: | 571MB |

Both indexes are small enough to fit comfortably in the OS cache, so the performance hit caused by disk IO should be reduced.

Query performance against these two indexes (and throwing in our wildcards-lean baseline for good measure):

| | ngrams-5-lean | no-ngrams-lean | wildcards-lean |
|---|---|---|---|
| Queries per second: | 66 | 105 | 26 |
| Average query response time (ms): | 66 | 41 | 185 |

and their query performance graphs: